In less calamitous times the latest breakthrough in the world of Artificial Intelligence would have made headlines around the world. The latest versions of text-to-image systems (DALL-E 2, Midjourney, Stable Diffusion) are a historic point of departure from what computers could do until now. They all seem to have finally crossed the chasm from being super interesting toys to incredibly powerful tools.

All the new systems work in roughly the same way: they let the user write a prompt in plain English (“a book cover for Frankenstein”) and create an image matching that description in seconds. The underlying algorithms are trained on hundreds of millions of images ingested from public sources. That may not sound like a recipe for creative output, but it is. The success of new, so-called “deep learning” algorithms has made this creative element possible.

At the moment, the words we routinely use to talk about software functionality do not fully capture just how surprisingly general these systems are. All of that comes with remarkable speed and very high-quality rendering. It is therefore perhaps best to treat the output itself as evidence.

For the purposes of testing, I thought a book cover for Frankenstein would be the right subject. My first instinct was to explore how artists whose styles we are very familiar with would paint the book cover. The image at the top of this article is what Midjourney thought Michelangelo would have turned out.

Here is what it thought Frida Kahlo would do

Henri Matisse

Andy Warhol

Midjourney even took a crack at the recently discovered abstract painter Hilma af Klint. This is what it made. Since it never says no and cannot explain itself, it is hard to see the connection to Frankenstein.

A pencil sketch by Leonardo da Vinci is more convincing

It is clear that the AI/machine learning system has abstracted the styles of great artists from the gigantic data set. This in itself is not surprising, and versions of this feature have been around for years, most often in the many popular photo filter apps. What is noteworthy is that the creations often do not merely take off from the most frequently seen visualization of Frankenstein, the one by the actor Boris Karloff. The echo of that work is in most of the images in this series. However, that is partly my choice, made to keep comparisons easy. It’s not a system limitation. For reference, here is a rendering of that image (notice the fine pencil work by Midjourney)

Not only does creatively mimicking well-known painters work well, so does the more subtle task of machine learning the style of photographers. Here is Frankenstein photographed by Henri Cartier-Bresson.

As an Annie Leibovitz “glamor portrait”

More surprisingly, Midjourney imagined Frankenstein as a miner when asked for a portrait by Sebastião Salgado. He is widely known for images of men and women at work in difficult environments.

Artists, art experts of any kind, or those belonging to the exacting world of print publishing will probably be skeptical. For one, these are all good approximations, but one can find many a flaw, from the often very literal interpretation of “style” to the imperfections in the rendering. What’s more, all of this is derivative, ultimately dependent on the originality of artists, not algorithms. For the skeptics, it’s important to remember that this is merely version 2 of these systems. They will improve and eventually diverge far from their human roots, just as other AI systems have in chess and Go. At first the game-playing systems trained by studying the games of grandmasters, but now they train and learn by pitting machines against machines.

Also, even now there is far more to the new AI. In fact, its ability to create never-before-visualized scenarios and compositions is what sets it apart. This makes these systems one of the first classes of software for which the phrase “artificial intelligence” might make sense - even if merely as an extreme case of ‘competence without comprehension’.

While Midjourney does not yet parse text as accurately as an artist might wield a brush, that is clearly only a matter of time. Here are some experiments with the type of work graphic artists are often asked to do. When told to make Frankenstein from colorful wool

Frankenstein from an arrangement of chopped fruits and vegetables

Using neon tubes

With an arrangement of colorful pebbles

Frankenstein as a lion

A chimpanzee Frankenstein

As a claymation puppet

The big news here is not just how original the system is but the fact that the output is clearly useful. In fact, if you are a publisher, work in the advertising industry, or are an independent writer/creator, it’s the dawn of a complete change in workflow. It takes minutes to visualize options that would otherwise take weeks or months. I made these images after knowing the system for less than a week, and they took only a few hours to generate. Most of that time was wasted going down avoidable rabbit holes.

For professional and practical use, however, a few big pieces are missing. One example is the ability to reuse a character for telling a story. If I want to take, say, the lion-Frankenstein and use that character design to generate other still poses or animation, that doesn’t work well as of now.

More alarmingly for all of us, creating synthetic images with ease might well prove as addictive as taking selfies on mobile phones. Eventually users will flood the internet with entirely new kinds of images and videos.

However, that might take a bit longer. The AI systems are not yet free to use, nor are they connected to big social media for the dopamine hit of shares/likes. There is also a big battle looming over copyright for the styles that fed the AI in the first place. Another roadblock is that we will need new rules governing synthetic images of celebrities and other public figures made without their explicit permission. Old free speech conventions governing cartoon caricatures and memes are unlikely to survive super-realistic-looking AI images.

The already vitiated public sphere faces a new test from synthetic fakes. Here is a made-up photo of Mary Shelley, who finished writing Frankenstein; or, The Modern Prometheus at the age of nineteen; it was published in 1818. I asked Midjourney for a Cartier-Bresson portrait “using natural light, with a Leica.”

It’s inevitable that this powerful capability will lead to ever greater quantities of wild yet believable fake images. Anyone familiar with the abysmal level of internet discourse today will shudder to think of an Instagram or TikTok feed with this type of tool built in.

For now, we are still in the age of innocence of AI-generated art. In this fleeting moment we can perhaps ignore the problems that are around the corner. Instead we can marvel at the emergence of a foundational new tool for translating human thought into images.

Part 2 in this series on AI generated images

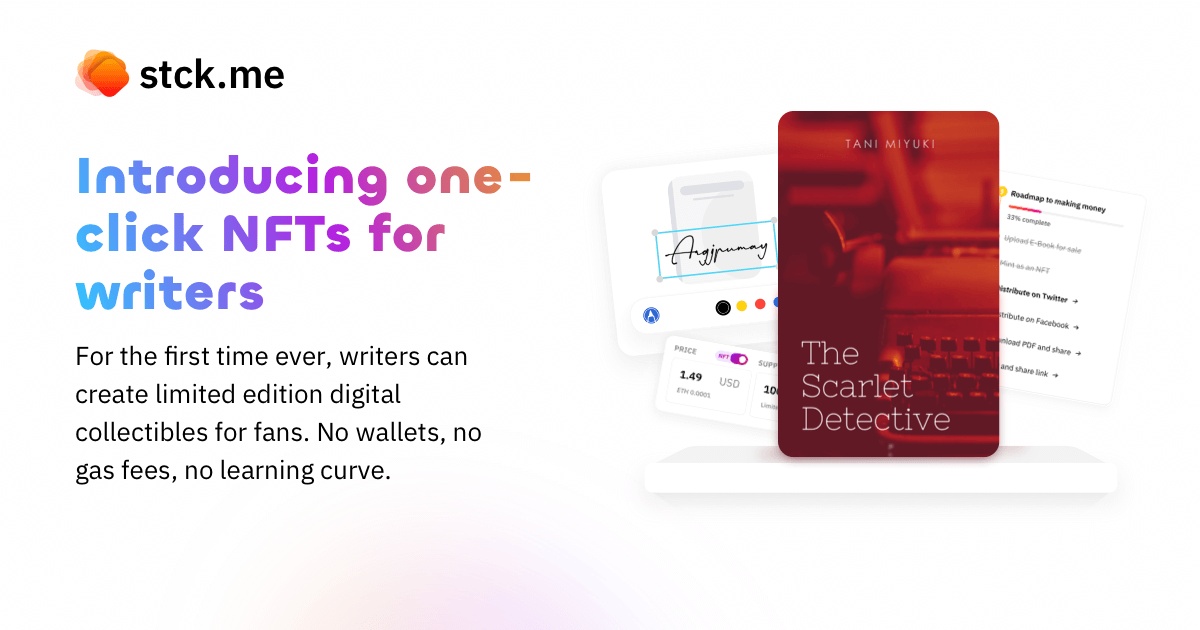

PS : If you are a writer who is intrigued by AI art or NFTs connect with us here
